The Infrastructure for Deployable AI Platforms

SyntaxMatrix is a full-stack Python framework for provisioning client-ready AI systems with integrated content, RAG, and machine learning capabilities.

Company Identity & Positioning

SyntaxMatrix exists at the intersection of infrastructure engineering and algorithm design, focusing on the delivery of production-grade AI platforms. Unlike hosted AI tools that lock users into rigid environments, SyntaxMatrix provides a full-stack Python framework for provisioning independent client instances. This approach allows organisations to own their AI stack entirely, from the web UI and role-based access controls to the underlying RAG pipelines and vector storage adapters.

Our primary focus is solving the 'rebuild' problem: the tendency for engineering teams to reconstruct the same foundational components (authentication, document ingestion, and chat interfaces) every time they launch a new AI product. By providing a repeatable platform baseline, we allow teams to focus on the unique logic of their domain rather than on system plumbing. We treat AI as an integrated component of a larger content and data ecosystem, so that assistants are grounded in each client's own documents and data.

At the heart of our offering is the smxPP platform, a comprehensive suite of modules for turning complex AI requirements into deployable systems. We do not simply provide an API; we provide the shell, the logic, and the persistence layers required to run a professional AI service. This includes a robust Page Studio for content management and an ML Lab for deep data analysis, creating a unified environment for both users and administrators.

Our philosophy is rooted in the belief that client-owned AI platforms are the only way to achieve true auditability and control. By deploying SyntaxMatrix, organisations gain a system that is governed by their own security policies and integrated with their specific data sources. We are not a general service agency; we are an infrastructure company providing the tools to build the next generation of intelligent software.

Client-Owned Platforms

Provision independent AI instances with full control over data, branding, and access.

Mission and Vision

Our mission is to deliver engineering outcomes that matter today, providing the rigorous infrastructure necessary to bridge the gap between AI research and production reality. We focus on providing developers with the components they need to build secure, streaming-ready, and grounded AI applications without the overhead of architectural trial and error. By standardising the application shell and ingestion pipelines, we empower teams to ship faster while maintaining the highest standards of technical excellence.

We define success by the stability and utility of the systems our framework supports, ensuring that every deployment is scalable and resilient. This mission is driven by a commitment to technical transparency: every module, from the vector store adapter to the role-aware navigation, is built to be understood and extended by the teams using it. We are building the tools that make AI a practical, manageable asset for the modern enterprise.

Our vision is a future defined by governed AI systems where every organisation can deploy composable platforms that are fully auditable and locally controlled. We believe the era of monolithic, opaque AI services will give way to a landscape of specialised, client-owned instances that prioritise data sovereignty. SyntaxMatrix aims to be the standard foundation for these systems, providing the modularity required to adapt to evolving model capabilities and regulatory requirements.

Long-term, we are working toward a world where AI is seamlessly integrated into every content and data workflow, moving beyond simple chat boxes to become active participants in organisational knowledge. By providing a platform where ownership and auditability are baked into the core, we are setting the stage for AI that is as trusted as the legacy software it enhances. Our goal is to ensure that as AI grows more capable, it also grows more predictable and manageable.

The Necessity of Structural AI

The current AI industry is plagued by the constant rebuilding of fragile RAG systems that fail to scale beyond the pilot phase. Most teams find themselves trapped in a cycle of writing custom ingestion scripts and managing ad-hoc UI components that lack proper governance or audit trails. This fragmentation creates significant technical debt, making it nearly impossible to maintain a consistent security posture or provide a reliable user experience across different client instances.

Furthermore, there is a profound separation between AI assistants and the content systems they are meant to interact with. When AI is treated as a separate 'add-on' rather than a core platform component, it loses the context and control required for enterprise-grade applications. This leads to hallucinations, poor document retrieval, and a general lack of trust from end-users who cannot verify the source of information or understand how the system reached a conclusion.

SyntaxMatrix was built to address these specific pain points by unifying the AI, the content, and the governance into a single framework. We eliminate the need to reinvent the wheel for every project by providing a battle-tested architecture that handles the complexities of document chunking, embedding generation, and role-based access control. This structure ensures that AI is not just a demo, but a robust part of the organisational infrastructure.

By focusing on a client-instance mindset, we enable organisations to deploy tailored AI environments that meet specific operational needs without sacrificing speed. Our framework provides the guardrails necessary to deploy AI with confidence, ensuring that data stays where it belongs and that every interaction is governed by the appropriate permissions. We exist to make AI predictable, repeatable, and ready for the demands of the modern enterprise.

Eliminate Technical Debt

Stop rebuilding foundational AI components and focus on your core product value.

The smxPP Platform Architecture

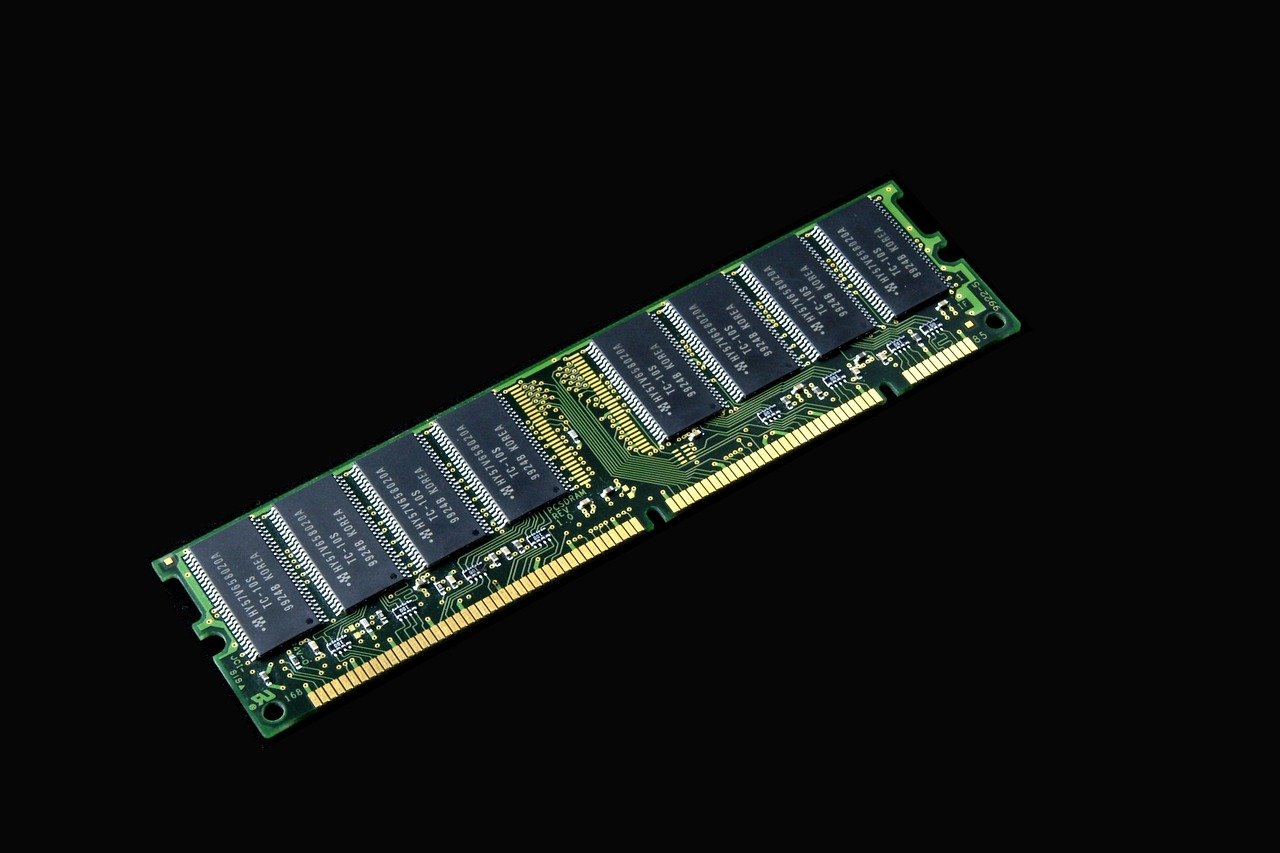

The smxPP platform is built on a modular, Flask-based application shell that provides the essential scaffolding for any AI-driven application. This architecture is designed to handle navigation, theming, and role-aware access control out of the box, ensuring that every client instance starts with a professional UI. The core of the system is highly extensible, allowing developers to enable or disable specific modules like the ML Lab or Page Studio depending on the client's requirements.
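To make the idea of per-client module toggles concrete, here is a minimal sketch of a module registry that an app shell could consult when building its navigation. It is an illustration under assumptions, not the smxPP API: `PlatformModule`, `ModuleRegistry` and the module keys are hypothetical names, and a real instance would likely read the enabled set from its own configuration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PlatformModule:
    """Hypothetical descriptor for one optional platform module."""
    name: str
    nav_label: str


@dataclass
class ModuleRegistry:
    """Hypothetical registry of known modules and the subset a client enables."""
    available: dict = field(default_factory=dict)
    enabled: set = field(default_factory=set)

    def register(self, module: PlatformModule) -> None:
        self.available[module.name] = module

    def configure(self, enabled_names) -> None:
        # Reject typos in a client's config instead of silently ignoring them.
        unknown = set(enabled_names) - set(self.available)
        if unknown:
            raise ValueError(f"Unknown modules: {sorted(unknown)}")
        self.enabled = set(enabled_names)

    def navigation(self) -> list:
        # Only enabled modules appear in the shell's navigation, in registration order.
        return [m.nav_label for name, m in self.available.items() if name in self.enabled]


registry = ModuleRegistry()
for key, label in [("chat", "Chat Assistant"), ("page_studio", "Page Studio"),
                   ("docs", "Documentation"), ("ml_lab", "ML Lab")]:
    registry.register(PlatformModule(key, label))

# One hypothetical client's instance config: this deployment does not use the ML Lab.
registry.configure({"chat", "page_studio", "docs"})
print(registry.navigation())  # ['Chat Assistant', 'Page Studio', 'Documentation']
```

Validating the enabled set against the registry means a misspelled module name fails at startup rather than silently hiding a feature from a client.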

Internally, the content management layer communicates directly with the RAG pipeline, so pages published in the Page Studio are indexed and made available to the Chat Assistant for retrieval. Streaming outputs and tool-calling workflows keep interactions responsive: users see the answer as it is generated rather than waiting for the complete response, and the assistant can call tools when a question needs more than retrieved text.
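The two interaction patterns named above can be sketched in a few lines. This is a hedged illustration, not the smxAI implementation: the tool registry, the `word_count` tool and the `{"name": ..., "arguments": {...}}` call shape are hypothetical, and `stream_answer` only simulates streaming by slicing a finished string, whereas a real model client would yield pieces as the model produces them.

```python
import json
from typing import Callable, Iterator

# Hypothetical registry mapping tool names the model may request to Python functions.
TOOLS: dict = {}


def tool(fn: Callable) -> Callable:
    """Register a function as a callable tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def word_count(text: str) -> int:
    return len(text.split())


def run_tool_call(call_json: str) -> str:
    """Execute a model-issued call such as {"name": ..., "arguments": {...}}; return JSON."""
    call = json.loads(call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        # Report unknown tools back to the model instead of crashing the chat turn.
        return json.dumps({"error": f"unknown tool {call['name']}"})
    return json.dumps({"result": fn(**call["arguments"])})


def stream_answer(answer: str, chunk_chars: int = 8) -> Iterator[str]:
    """Simulated stream: yield the answer in small pieces so a UI can render them as they arrive."""
    for i in range(0, len(answer), chunk_chars):
        yield answer[i:i + chunk_chars]


print(run_tool_call('{"name": "word_count", "arguments": {"text": "a b c"}}'))  # {"result": 3}
for piece in stream_answer("Grounded answers arrive incrementally."):
    print(piece, end="", flush=True)
print()
```

Returning tool results and errors as JSON keeps the exchange with the model uniform: whatever happens, the model receives a structured message it can reason about in its next step.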

Persistence is handled through a pluggable storage layer, allowing organisations to choose the database and vector store that best fits their operational constraints. Our vector-store adapter approach means that a pilot can start on a local SQLite instance and transition to production-grade PostgreSQL with pgvector or Milvus without rewriting a single line of application logic. This flexibility is a core tenet of our architectural philosophy, providing a clear path from prototype to enterprise scale.

Security is baked into every layer of the architecture, with role-aware pathways ensuring that sensitive documentation and ML results are only accessible to authorised users. The documentation viewer is integrated directly into the app shell, rendering structured markdown and client assets securely. This integrated design ensures that the platform remains a single source of truth for both human users and AI agents, fostering a coherent and governed data environment.
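Role-aware pathways matter most at retrieval time: if restricted documents are filtered out before they reach the Chat Assistant or the documentation viewer, a user cannot see them indirectly through a generated answer. The sketch below shows one simple way to express this, assuming a linear role hierarchy; `ROLE_RANK`, `Document` and `visible_to` are hypothetical names, and a real deployment might use richer, non-hierarchical permissions.

```python
from dataclasses import dataclass

# Hypothetical linear role hierarchy: a higher rank can read everything a lower rank can.
ROLE_RANK = {"public": 0, "employee": 1, "admin": 2}


@dataclass(frozen=True)
class Document:
    doc_id: str
    title: str
    min_role: str  # lowest role allowed to read this document


def visible_to(role: str, docs: list) -> list:
    """Return only the documents the given role is authorised to read."""
    if role not in ROLE_RANK:
        raise ValueError(f"Unknown role: {role}")  # fail closed on unexpected roles
    rank = ROLE_RANK[role]
    return [d for d in docs if ROLE_RANK[d.min_role] <= rank]


docs = [
    Document("faq", "Public FAQ", "public"),
    Document("runbook", "Incident runbook", "employee"),
    Document("ml-report", "Churn model report", "admin"),
]
print([d.doc_id for d in visible_to("employee", docs)])  # ['faq', 'runbook']
```

Raising on an unknown role, rather than defaulting to some level of access, keeps a misconfigured account from seeing more than intended.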

Core Platform Capabilities

Our capabilities are designed around the actual workflows of engineering teams and business users. We do not offer abstract features; we provide concrete tools that solve specific operational challenges in the deployment and management of AI systems. Every capability is built to be manageable through a central interface, reducing the reliance on manual code changes for everyday tasks.

By integrating content management, deep analytics, and advanced retrieval into a single framework, we provide a holistic environment where AI can thrive. These modules are built to work in concert, ensuring that information flows seamlessly from document ingestion to the chat interface and into the ML Lab for further analysis.

Chat Assistant (smxAI)

Supports streaming, tool calling, and grounded RAG answers. It provides the interactive interface for users to engage with your organisation's specific knowledge base.

Knowledge Ingestion

Automated pipelines for text extraction, chunking, and embedding generation. New and updated documents are re-indexed, so the assistant answers from your current documentation and data.

Page Studio

A full content management system for creating and publishing pages. It allows non-technical users to manage the website front-end without touching the codebase.

Documentation Viewer

Renders README files and technical assets directly in the app. It provides a structured, navigable area for internal or external documentation.

ML Lab & Exports

A dedicated environment for exploratory data analysis (EDA) and modelling. Upload CSVs, run experiments, and export professional HTML reports for stakeholders.

Vector Store Adapters

Switch between SQLite, Postgres/pgvector, Milvus, or Pinecone as you scale. This ensures your infrastructure grows with your data requirements.
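The Knowledge Ingestion step described above splits extracted text into chunks before embeddings are generated. As an illustration only, the sketch below uses fixed-size word windows with overlap, a common chunking strategy; the function name `chunk_text` and its defaults are hypothetical, and smxPP may instead chunk by tokens, headings or sentences.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list:
    """Split text into windows of chunk_size words; consecutive windows share overlap words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
print(chunk_text(sample, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, which tends to help retrieval at the cost of storing some text twice.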

Engineering Principles

Our principles are the foundation of our engineering culture and the primary drivers of our product decisions. In an industry often distracted by hype, we prioritise substance, predictability, and the long-term viability of the systems we build.

Sovereign Infrastructure

We believe every organisation should own their AI platform and its data. Our framework is designed for deployment within your controlled environment, not as a black-box service.

Composable Design

Systems should be built from independent modules that can be enabled or disabled. This prevents technical bloat and allows for tailored client deployments.

Audit-Ready AI

Trust is built on transparency. Our RAG pipelines and role controls ensure that every AI action is grounded and every user interaction is appropriately governed.

Growth-First Persistence

Infrastructure must scale without redesign. Our vector-store adapter approach ensures a smooth transition from pilot projects to massive production deployments.

Founders & Governance

SyntaxMatrix is led by a team dedicated to technical integrity and organisational stability. We believe that professional AI platforms require both deep engineering expertise and a rigorous approach to compliance and corporate governance. Our leadership ensures that SyntaxMatrix remains a trusted partner for enterprises looking to deploy AI with confidence.

Bobga Nti

Founder and Lead AI Engineer. Bobga focuses on the core architecture of SyntaxMatrix, drawing on extensive experience in system design and machine learning to build the smxPP framework. His work ensures that the technical foundation of every client instance is robust, scalable, and built on sound engineering principles.

Yvonne Motuba

Company Secretary and Governance Lead. Yvonne oversees the organisational stability and compliance frameworks that support our technical operations. Her focus on governance ensures that SyntaxMatrix meets the rigorous standards required by enterprise clients and that our internal processes are as reliable as the software we build.

Credibility & Roadmap

SyntaxMatrix is currently powering production-grade AI platforms that require high levels of availability and data grounding. Our framework has been meticulously designed to handle real-world document volumes and complex user interactions, providing a stable environment for both internal tools and client-facing applications. The smxPP framework is not just a collection of components; it is a battle-tested system currently delivering value across multiple industries.

Our premium and enterprise offerings provide the advanced features required for large-scale operations, including deep licensing controls, comprehensive audit trails, and granular deployment management. We offer dedicated support for private cloud deployments and custom open-model fine-tuning, allowing organisations to reach the highest levels of privacy and performance. These premium capabilities are designed to integrate seamlessly with the core framework, providing a clear path for growth as your AI needs evolve.

Looking ahead, our roadmap focuses on increasing the composability of the smxPP platform, adding new tool-calling capabilities and expanding our library of vector-store connectors. We are committed to remaining at the forefront of AI infrastructure, ensuring that our clients always have access to the latest advancements in retrieval and modelling. Our development cycle is driven by the feedback of our enterprise users, ensuring that every new feature serves a concrete operational purpose.

We are also expanding our documentation and developer tools to make it even easier for teams to customise and deploy their own SyntaxMatrix instances. By providing better abstractions and more robust testing environments, we aim to further reduce the time it takes to move from a conceptual AI product to a live, governed system. Our focus remains on stability, security, and the empowerment of engineering teams around the world.

Operational Questions

Find answers to the most common questions regarding the deployment and management of the SyntaxMatrix framework.

What is a client instance?

A client instance is an independent deployment of the SyntaxMatrix framework, complete with its own database, configuration, and branding. This ensures data isolation and allows for per-client customisation.

Where does our data live?

Your data lives within the persistence layer you choose for your deployment. Whether you use local SQLite or enterprise-grade Postgres, you maintain full control over the storage environment.

Can we deploy privately?

Yes. SyntaxMatrix is designed to run in standard Python environments and can be deployed in your private cloud or on-premise infrastructure to meet security requirements.

How does licensing work?

We offer flexible licensing models based on the number of instances and the level of support required. Premium tiers include advanced features like vector-store upgrades and fine-tuning workflows.

What do premium plans provide?

Premium plans include connectors for enterprise vector stores (Pinecone, Milvus), enhanced audit logs, and priority engineering support for custom deployments.

Start Building with SyntaxMatrix

Transition from experimental scripts to a governed, professional AI platform today. Our framework provides the reliability and structure your enterprise needs to ship AI products with confidence.

Talk to SyntaxMatrix: mailto:contact@syntaxmatrix.com

View Documentation: /docs